How Hazardous is Radon?

Today I would like to look at radon, a colorless, odorless, radioactive noble gas that is implicated as a major lung cancer hazard. How dangerous is it really, and what ought we to do about it?

About Radon

Radon is element 86 of the periodic table. Many isotopes of radon are known, and three of them occur naturally in significant quantities. Of those, radon-222 is the most prevalent; it is also the most stable isotope, with a half-life of about 3.8 days. Confusingly, radon-222 has historically been called simply radon, since it was the first isotope discovered. Radon-222 is an immediate decay product of radium-226, which in turn is a decay product of uranium-238. Because uranium-238 has a half-life of billions of years, radon is continually replenished in the natural world despite its own short half-life. The other naturally occurring radon isotopes are radon-219 (actinon) and radon-220 (thoron), which lie on the decay chains of uranium-235 and thorium-232 respectively.
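To see why a 3.8-day half-life does not mean radon runs out, here is a minimal sketch of the exponential decay law, N(t)/N0 = 2^(-t/T), where T is the half-life. The constants are rounded approximations (about 3.8 days for radon-222 and about 4.5 billion years for uranium-238), and the function name is purely illustrative.

```python
RADON_222_HALF_LIFE_DAYS = 3.8       # approximate value quoted in the text
URANIUM_238_HALF_LIFE_YEARS = 4.5e9  # approximate; "billions of years"


def fraction_remaining(elapsed: float, half_life: float) -> float:
    """Fraction of an initial quantity left after `elapsed` (same time units as half_life)."""
    return 2.0 ** (-elapsed / half_life)


# Radon sealed in a container is essentially gone after ten half-lives (~38 days)...
print(f"Rn-222 left after 38 days: {fraction_remaining(38, RADON_222_HALF_LIFE_DAYS):.4%}")
# ...while its ultimate parent, uranium-238, barely changes over a million years.
print(f"U-238 left after 1 million years: {fraction_remaining(1e6, URANIUM_238_HALF_LIFE_YEARS):.4%}")
```

Any given batch of radon disappears within weeks, but the uranium feeding the chain is, on human timescales, an effectively constant source.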

Historically, radon was used in medicine to treat cancer, a use since superseded by other treatments, and for various scientific purposes. Nowadays, radon is mostly regarded as a hazard rather than as a useful element.

The Health Hazards of Radon

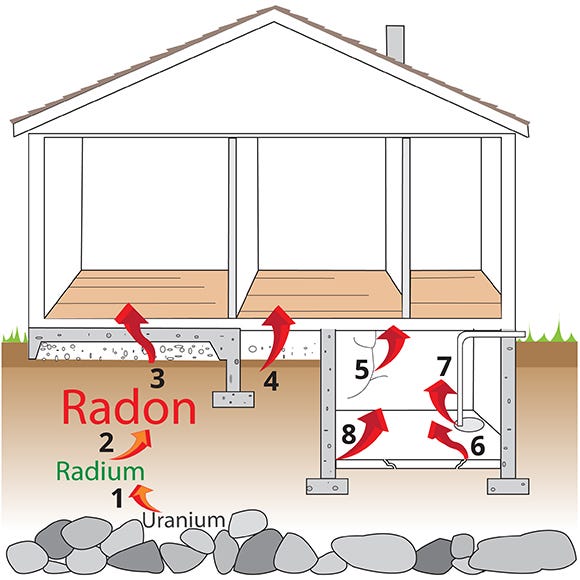

Radon-222 (henceforth simply radon) is one of the heaviest gases in nature. Because it seeps into buildings from the underlying soil and is denser than air, it tends to concentrate in basements. Air circulation and venting can reduce the concentration.

The health hazards of radon were first discovered among miners, since mines, especially uranium mines, are another place where radon gas accumulates. Several studies, such as this one and this one and this one, among others, have found elevated rates of lung cancer among uranium miners and attributed them to radon. Higher rates of respiratory illness among miners in areas now known to contain radioactive minerals were identified in the 15th century, and higher rates of lung cancer were identified in 1879, well before radon itself was discovered.

Radon emits an alpha particle when it decays. Inside the lungs, these alpha particles can trigger a series of biological reactions that lead to lung cancer. Radon's own decay products (polonium-218, lead-214, bismuth-214, and polonium-214) are solids with short half-lives that can lodge in the airways, where they too damage lung tissue; in fact, they deliver most of the radiation dose.
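A short sketch of the main steps of the chain below radon-222 makes the point about short-lived, solid decay products concrete. The half-lives are rounded values from standard nuclear data tables, minor decay branches are ignored, and the one-hour cutoff for "short-lived" is an arbitrary choice for illustration.

```python
MINUTE, DAY, YEAR = 60.0, 86_400.0, 3.156e7  # seconds

# (nuclide, principal decay mode, approximate half-life in seconds)
RADON_CHAIN = [
    ("Rn-222", "alpha", 3.82 * DAY),
    ("Po-218", "alpha", 3.1 * MINUTE),
    ("Pb-214", "beta", 26.8 * MINUTE),
    ("Bi-214", "beta", 19.9 * MINUTE),
    ("Po-214", "alpha", 164e-6),
    ("Pb-210", "beta", 22.2 * YEAR),  # long-lived; effectively ends the short chain
]


def short_lived_progeny(chain, cutoff_seconds=3600.0):
    """Return the nuclides after the first one whose half-lives are below the cutoff."""
    return [entry for entry in chain[1:] if entry[2] < cutoff_seconds]


progeny = short_lived_progeny(RADON_CHAIN)
# Count alpha emissions from the radon atom itself plus its short-lived daughters.
alphas = sum(1 for _, mode, _ in [RADON_CHAIN[0], *progeny] if mode == "alpha")
print("Short-lived progeny:", [name for name, _, _ in progeny])
print("Alpha decays from one Rn-222 atom down to Pb-210:", alphas)
```

Within roughly an hour of a radon atom decaying, its solid daughters have typically emitted two more alpha particles, which is why the progeny matter as much as the gas.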

A commonly quoted figure in the United States is that 21,000 lung cancer deaths in the country every year can be attributed to radon, which would make radon the second leading cause of lung cancer overall and the leading cause among non-smokers. The source of that figure is a 2003 study from the EPA. According to the study, radon exposure is synergistic with smoking: the lung cancer risk of smoking and radon exposure together is greater than the sum of the two risks individually. The study estimates about 18,200 radon-related lung cancer deaths among smokers and 2,900 among non-smokers, for a total of about 21,100.
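The difference between additive and synergistic risk is easy to see with numbers. The sketch below compares two simple ways of combining relative risks; the relative risks for smoking and radon are hypothetical values chosen for illustration, not figures from the EPA study, whose actual risk model is more elaborate than either formula.

```python
def joint_rr_additive(rr_smoking: float, rr_radon: float) -> float:
    """Joint relative risk if the two excess risks simply add."""
    return 1.0 + (rr_smoking - 1.0) + (rr_radon - 1.0)


def joint_rr_multiplicative(rr_smoking: float, rr_radon: float) -> float:
    """Joint relative risk if the two risks multiply (one simple form of synergy)."""
    return rr_smoking * rr_radon


# Hypothetical relative risks versus an unexposed non-smoker.
RR_SMOKING = 10.0
RR_RADON = 1.2

for name, combine in (("additive", joint_rr_additive),
                      ("multiplicative", joint_rr_multiplicative)):
    joint = combine(RR_SMOKING, RR_RADON)
    # Extra risk that radon adds for a smoker, on top of smoking alone.
    radon_excess_in_smoker = joint - RR_SMOKING
    print(f"{name:>14}: joint RR = {joint:.1f}, radon adds {radon_excess_in_smoker:.1f} "
          f"for a smoker vs {RR_RADON - 1.0:.1f} for a non-smoker")
```

Under the multiplicative model in this example, the same radon exposure adds ten times as much risk for a smoker as for a non-smoker, which helps explain why most of the estimated radon deaths fall among smokers.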

There are problems with the study. Most obviously, it is outdated: the study itself is from 2003 and estimates lung cancer deaths in 1995. Smoking rates have fallen by about half since 1995, and because the large majority of the estimated radon deaths occur among smokers, that alone makes it seem reasonable to reduce estimates of radon deaths by around 40%. Population growth, population aging, and better health care for cancer patients also change the picture, in different directions.
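The roughly 40% adjustment can be sketched as simple arithmetic. The sketch assumes, crudely, that radon deaths among smokers scale in proportion to the smoking rate while deaths among non-smokers stay the same. The smoker share of roughly 86% is an approximation read off the study's split between smokers and non-smokers, and population growth, aging, and treatment improvements are ignored.

```python
def adjusted_radon_deaths(total: float, smoker_share: float, smoking_decline: float) -> float:
    """Scale only the smokers' portion of radon deaths by the drop in smoking rates."""
    smoker_deaths = total * smoker_share
    never_smoker_deaths = total - smoker_deaths
    return smoker_deaths * (1.0 - smoking_decline) + never_smoker_deaths


TOTAL_1995 = 21_000       # commonly quoted EPA figure
SMOKER_SHARE = 0.86       # approximate share of radon deaths among smokers
SMOKING_DECLINE = 0.5     # smoking rates roughly halved since 1995

adjusted = adjusted_radon_deaths(TOTAL_1995, SMOKER_SHARE, SMOKING_DECLINE)
reduction = 1.0 - adjusted / TOTAL_1995
print(f"Adjusted estimate: {adjusted:,.0f} deaths ({reduction:.0%} lower)")
```

Under these assumptions the reduction is just the smoker share times the decline in smoking, 0.86 × 0.5 ≈ 0.43, hence "around 40%".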

But the most worrisome aspect of the 2003 study is its risk model, which the study derives from a 1999 National Research Council report called BEIR VI. When a patient is diagnosed with lung cancer, there are generally no biomarkers that indicate the source of the cancer, which could be smoking, radon, particulate matter, asbestos, or other carcinogens. Instead, we have to rely on indirect evidence to determine how much lung cancer can be attributed to radon.

Linear No-Threshold

If you follow issues related to the safety of nuclear power, you may have guessed where this discussion is headed.

Each of the three studies of lung cancer among miners cited above finds a linear relationship between cumulative radon exposure and lung cancer risk. BEIR VI then extrapolates that linear relationship down to the radon levels found in homes.

A recent review of non-uranium Canadian mines finds an average radon concentration of 570 becquerels per cubic meter (Bq/m3). A becquerel is the SI unit of radioactivity, equal to one decay event per second. Thus in the average non-uranium Canadian mine, one can expect 570 radon decays per second in each cubic meter of air. Some mines show concentrations above 10,000 Bq/m3. This study finds 410,000 Bq/m3 in a closed Hungarian uranium mine. This study finds mines with concentrations above one million Bq/m3. This paper finds that concentrations above 10,000 Bq/m3 are not uncommon among non-uranium mines. At these high concentrations, radon is an easily measured health hazard, and studies such as those cited above can measure the increased incidence of lung cancer among miners.

Even high-radon homes have far lower concentrations, in many cases by orders of magnitude. The EPA has set an action level of 4 picocuries per liter (pCi/L), equivalent to 148 Bq/m3, at which it recommends mitigation (sorry to those without scientific backgrounds for these non-intuitive units of measure). It recommends considering action at 74-148 Bq/m3 (2-4 pCi/L). The average radon level in homes is about 48 Bq/m3, and the average outdoor radon level is around 15 Bq/m3.
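For moving between the two units, the conversion follows from the definition of the curie (1 Ci = 3.7 × 10^10 Bq), which makes 1 pCi/L equal to 37 Bq/m3. The sketch below converts the levels quoted in this post and expresses each as a multiple of the average home; the function and dictionary names are illustrative.

```python
BQ_PER_PICOCURIE = 0.037       # 1 curie = 3.7e10 Bq, so 1 pCi = 0.037 Bq
LITERS_PER_CUBIC_METER = 1_000


def pci_per_l_to_bq_per_m3(pci_per_l: float) -> float:
    """Convert a radon concentration from pCi/L to Bq/m3 (1 pCi/L = 37 Bq/m3)."""
    return pci_per_l * BQ_PER_PICOCURIE * LITERS_PER_CUBIC_METER


def bq_per_m3_to_pci_per_l(bq_per_m3: float) -> float:
    """Inverse conversion, from Bq/m3 to pCi/L."""
    return bq_per_m3 / (BQ_PER_PICOCURIE * LITERS_PER_CUBIC_METER)


# Concentrations quoted in this post, in Bq/m3.
LEVELS = {
    "average outdoor air": 15,
    "average home": 48,
    "EPA action level": pci_per_l_to_bq_per_m3(4),
    "Canadian action level": 200,
    "average non-uranium Canadian mine": 570,
    "closed Hungarian uranium mine": 410_000,
}

for label, bq in LEVELS.items():
    ratio = bq / LEVELS["average home"]
    print(f"{label:>34}: {bq:>9,.0f} Bq/m3 = {bq_per_m3_to_pci_per_l(bq):>8,.1f} pCi/L "
          f"({ratio:,.1f}x the average home)")
```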

Following the observations of linearity in the cited studies, BEIR VI, as well as more recent work such as that by the World Health Organization, extrapolates the risk of home and everyday environmental exposure linearly from the risks found among miners. This is what linear no-threshold (LNT) means: risk is assumed to be proportional to cumulative exposure all the way down to zero, with no level below which exposure is safe.
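A minimal sketch contrasting LNT with one simple alternative, a linear-threshold model. The slope and threshold below are hypothetical values chosen only to show the shape of the two models, not estimates from BEIR VI or any other study; exposure is expressed as a radon concentration, assuming equal exposure durations.

```python
def lnt_risk(exposure: float, slope: float) -> float:
    """Linear no-threshold: excess relative risk proportional to exposure, down to zero."""
    return slope * exposure


def threshold_risk(exposure: float, slope: float, threshold: float) -> float:
    """Linear-threshold alternative: no excess risk below the threshold, linear above it."""
    return slope * max(0.0, exposure - threshold)


SLOPE = 1e-5        # hypothetical excess relative risk per Bq/m3
THRESHOLD = 500.0   # hypothetical threshold in Bq/m3, purely for illustration

for exposure in (48, 148, 10_000, 410_000):
    print(f"{exposure:>8,} Bq/m3  LNT={lnt_risk(exposure, SLOPE):.5f}  "
          f"threshold={threshold_risk(exposure, SLOPE, THRESHOLD):.5f}")
```

At mine-level concentrations the two models are nearly indistinguishable, yet they disagree completely about typical home levels, which is why mine data alone cannot settle the question.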

LNT should challenge our intuition about how risk works. To take an example from Jack Devanney’s book, Why Nuclear Power Has Been a Flop, consider drinking a glass of wine every day for a year. We can debate the health risks of this, but unless a person is an alcoholic or has some kind of allergy, the habit is unlikely to cause serious health problems. On the other hand, consuming the alcohol in 365 glasses of wine in a single day will kill you. LNT is too simplistic a model for the risk of alcohol exposure, and it may be too simplistic for radiation exposure.

It is not feasible to address the controversy around LNT fully here. In his book, Devanney offers some alternative risk models for low, chronic levels of radiation, and he discusses many lines of evidence against the validity of LNT. I don’t think the evidence favors LNT, but I also haven’t seen compelling evidence in favor of any other model. Specifically in the context of radon, Bernard Cohen has argued against the validity of LNT and for the hypothesis that the hazards of typical home radon levels are exaggerated. Cohen’s work, in turn, has attracted some criticism. More recent work goes so far as to argue that radon exposure within normal parameters actually reduces the risk of lung cancer. Charles Sanders, in his book Radiobiology and Radiation Hormesis, argues against LNT and includes a chapter entitled “Wonderful Radon”.

I don’t think the published evidence is strong enough to draw a firm conclusion one way or the other. However, despite this extensive digression into LNT, note that there is also direct evidence linking residential radon to lung cancer, so we should not conclude that elevated radon exposure is harmless.

Radon Mitigation

Suppose now that we accept the mainstream evidence of risk. What ought to be done about it? Home radon is typically addressed through testing and, where radon levels are found to be dangerously high, through mitigation such as sealing basements and venting soil gas from beneath the foundation.

A vendor indicates that a professional test will set you back around $150-300, and mitigation around $800-1500. Or you can get this test kit for $9.99, plus a $20 lab fee, from Target.

Statistics from Minnesota show that just under 1% of households had their homes tested professionally in 2022, down 40% from 2020. Of Calgary homes that test above Canada’s action level of 200 Bq/m3, 38% are mitigated. I wonder why someone would bother testing if they don’t intend to mitigate, but whatever. There aren’t many good national or worldwide statistics, but the few other regions I’ve looked at have similar numbers.

Several studies have calculated costs from testing and mitigation in terms of dollars per life saved, per lung cancer case prevented, or per life-year gained. We’ll rapid-fire through some of them. This study across the United States finds that testing isn’t very cost-effective. This study does find cost-effectiveness across Canada, but it uses what seems to me a suspiciously low discount rate of 1.5%. This study does not find cost-effectiveness in the UK. This study in the UK does find cost-effectiveness. This study in Canada finds cost-effectiveness in regions where radon levels are high. This study does not find cost-effectiveness in Sweden. This study finds cost-effectiveness in Germany.
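The discount rate matters because a mitigation program pays its costs up front, while the lung cancers it prevents occur many years later. The sketch below uses an entirely hypothetical program (a $1 million up-front cost and one life-year gained per year in years 20 through 39) to show how the cost per discounted life-year moves with the discount rate.

```python
def present_value(stream, rate: float) -> float:
    """Discount a stream of yearly amounts (index 0 = today) back to the present."""
    return sum(amount / (1.0 + rate) ** year for year, amount in enumerate(stream))


UPFRONT_COST = 1_000_000                    # hypothetical program cost, paid today
LIFE_YEARS = [0.0] * 20 + [1.0] * 20        # hypothetical: benefits arrive in years 20-39


def cost_per_life_year(rate: float) -> float:
    """Cost per discounted life-year gained at the given annual discount rate."""
    return UPFRONT_COST / present_value(LIFE_YEARS, rate)


for rate in (0.015, 0.03, 0.05):
    print(f"discount rate {rate:.1%}: ${cost_per_life_year(rate):,.0f} per life-year")
```

Lower discount rates give more weight to distant benefits, so the same program looks more cost-effective at 1.5% than at 3% or 5%.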

Rates of remediation are key. These three studies find that the remediation rate can make the difference between a program being cost-effective and not.
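Why the remediation rate matters so much: testing costs are paid for every home tested, but health benefits accrue only in homes that are actually mitigated. In the sketch below, the test and mitigation costs fall within the vendor ranges quoted earlier and 38% is the Calgary mitigation rate, while the share of homes above the action level and the cases prevented per mitigated home are hypothetical placeholders.

```python
def cost_per_case_prevented(
    remediation_rate: float,
    homes_tested: int = 1_000,
    test_cost: float = 200.0,             # within the $150-300 professional range
    mitigation_cost: float = 1_200.0,     # within the $800-1500 range
    share_above_action: float = 0.10,     # hypothetical
    cases_per_mitigation: float = 0.005,  # hypothetical cancers prevented per mitigated home
) -> float:
    """Total testing plus mitigation cost divided by lung cancer cases prevented."""
    homes_mitigated = homes_tested * share_above_action * remediation_rate
    total_cost = homes_tested * test_cost + homes_mitigated * mitigation_cost
    cases_prevented = homes_mitigated * cases_per_mitigation
    return total_cost / cases_prevented


for rate in (0.38, 0.7, 1.0):
    print(f"remediation rate {rate:.0%}: ${cost_per_case_prevented(rate):,.0f} per case prevented")
```

Because the fixed testing cost is spread over fewer mitigated homes, the cost per case prevented roughly doubles in this example as the remediation rate falls from 100% to 38%.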

Every study I have found that compares remediation of existing homes with enhanced construction standards for new homes finds the latter more cost-effective. Examples include this one and this one and this one and this one.

Role for Government?

In the United States, the government’s role in radon mitigation is mainly at the state level. Federal efforts are limited; one example is a recent program from the Department of Housing and Urban Development to test public housing in select areas.

To argue that the government should spend more on testing, we need to identify the market failure. The health benefits of testing and mitigation largely accrue to the one doing the test, so there isn’t really an externality. This is true even if a homeowner sells, since a favorable test result or completed mitigation should be reflected in the house price. In 1995, a study in Sweden derived willingness to pay for reduced radon concentrations by examining property values and found a value of around $6,000 (converted to USD and CPI-adjusted to 2024).

There might be an information market failure, in that most citizens do not know the level of danger. There is certainly a lack of public understanding, and there is evidence that public notification policies increase the rate of testing.

But as discussed above, it does not appear that the government knows the level of danger either. If the EPA recommends that citizens spend billions of dollars on testing and mitigation, it is reasonable to expect, at the very least, an update to the outdated 2003 study. Given the uncertainties about radon risk, the mixed results on the cost-effectiveness of testing, and the lack of clarity about the market failures involved, it is hard to make the case that radon testing and mitigation is a wise investment of public dollars.

Quick Hits

The BBC ran an article this week entitled “Why we might never know the truth about ultra-processed foods”. The term “ultra-processed foods” was coined by Carlos Monteiro in 2009 as part of a four-level taxonomy of food processing that has become popular. The truth, though, is much less mysterious than the article implies. The British Nutrition Foundation observes that highly processed foods tend to be higher in salt, sugar, and fat, and thus processing is a proxy for negative health impacts, albeit a highly imperfect one. The level of processing is less transparent than the nutrition information that most national governments require foods to carry, so I struggle to see what use processing has as a proxy for healthiness. Monteiro’s taxonomy also trades on the appeal-to-nature fallacy: people have a vague notion that “processed” means “unnatural”, and consequently “bad”.

Also last week, United Nations Secretary-General António Guterres again called for a faster fossil fuel phaseout among wealthy countries. Climate change has been a signature issue of Guterres’s tenure, but as I argued last week, such calls are not accompanied by reality-based thinking about the world energy system.

Energy efficiency investments often perform worse in practice than in engineering analyses, because real life entails various hidden costs that simplified models overlook. This is why it is so important to be rigorous about what market failure a proposed government policy is intended to correct. This paper from a few years ago on the poor performance of home weatherization programs is a prime example. This is a topic that I would like to explore further later on.

Matthew Wald recently examined the EI Statistical Review of World Energy, as did I last week. Wald’s piece is worth a read, and it contains a lot of analysis that I didn’t touch on in my post. Wald notes that renewable energy deployment is not keeping pace with overall energy demand, which I noted as well, but I also considered several lines of evidence that overall energy demand is growing, at least in part, because of renewable energy deployment. As enthusiastic as I am about new nuclear power, I don’t expect the story for nuclear to be any different, and there is simply no getting around the fact that if fossil fuel usage is to go down, there has to be some kind of carbon pricing.

After nearly 23 years, the government reached a plea deal with Khalid Sheikh Mohammed, the most prominent surviving perpetrator of the September 11 attacks. The deal was widely panned across the political spectrum, and on Friday Defense Secretary Lloyd Austin revoked it. Allegations of torture and problems with the military commission process, rather than a proper civilian trial, have contributed to the case taking so long. It wouldn’t surprise me if KSM dies without the case being resolved. To date, Zacarias Moussaoui is the only person to have been convicted in a US court for a role in 9/11.

Garfield the cat and I share some political beliefs.