Scale and Existential Risk

As mentioned last time, I am switching to a biweekly post schedule for now because I can no longer keep up with the weekly schedule. That also gives me more time to think the posts through. But unfortunately, this one is not as well developed as I had hoped because I have been sick for the last several days.

Existential risk (x-risk) has been defined by Bostrom (2013) as follows.

An existential risk is one that threatens the premature extinction of Earth-originating intelligent life or the permanent and drastic destruction of its potential for desirable future development.

Today, I’ll look at some definitions and deeper expositions of topics, consider how risk might be communicated and classified, and then take a look at Trammell and Aschenbrenner (2024) on whether we should expect overall risk to increase or decline into the future.

Understanding Existential Risk

Under Bostrom’s definition, “existential” risk has a high bar. Below it sit global catastrophic risks, as defined by Currie and Ó hÉigeartaigh (2018), which would cause serious harm on a planetary scale. There is no universally agreed-upon threshold for what constitutes a global catastrophe, though a common suggestion is that it entails at least a billion deaths. If so, then the worst catastrophes in modern times do not even come within an order of magnitude of constituting a global catastrophe. That list includes World War II, the Spanish Flu, COVID-19 (12-29 million deaths according to data from the World Health Organization, analyzed by The Economist, and presented by Our World in Data), and the Great Leap Forward famine. To find events that might qualify, we have to go back to the Middle Ages, and the Mongol conquests and the Black Death in particular, and even then they might qualify only because of the lower world population at the time.

An existential catastrophe, or at least the form that entails the extinction of humanity, is on the surface almost an order of magnitude worse than that. But Bostrom (2013) argues that even this grim picture does not come close to telling the whole story. That is because an existential catastrophe destroys not just all people who are alive today, but all people who might ever live in the future. Bostrom (2013) estimates that 10¹⁶ people who are more or less biologically human might live on Earth while the planet is habitable. If we consider the possibility of expansion into space—very reasonable on such a long time scale—then the possible number of future human lives is 10³²-10⁵², assuming 100 years per life.

(If anyone knows how to make inline superscripts look less funky, I would appreciate knowing. For a separate line equation, I can use Substack’s LaTeX editor, but I have not figured out a good solution for inline expressions.)

Bostrom (2013) posits the maxipok rule, which is that the morally best policy is the one that maximizes the probability of an OK outcome, meaning one that avoids existential catastrophe. The argument is based on utilitarian reasoning and the premise that potential lives in the future should have the same moral weight as lives today. Both of these bases can be contested. For the latter, see the overview of Greaves (2017) on population ethics, or my post on the subject a couple of years ago.
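To see why the argument leans so heavily on the moral weight given to future lives, it can help to run the arithmetic. The sketch below is only illustrative: the 10¹⁶ figure is the estimate quoted above, while the size of the risk reduction, the discounted moral weight, and the function name are assumptions chosen for this example.

```python
from fractions import Fraction

# Estimate quoted above: future lives on Earth while the planet is habitable.
FUTURE_LIVES_ON_EARTH = 10**16


def expected_lives_saved(risk_reduction, future_lives=FUTURE_LIVES_ON_EARTH,
                         moral_weight=Fraction(1)):
    """Expected number of (weighted) future lives preserved by lowering
    the probability of extinction by `risk_reduction`."""
    return Fraction(risk_reduction) * future_lives * moral_weight


# Hypothetical policy: cuts extinction probability by one in a hundred million.
tiny_reduction = Fraction(1, 10**8)

# Future lives weighted the same as present lives.
print(expected_lives_saved(tiny_reduction))  # 100000000

# Assumed alternative: each future life counts one thousandth as much.
print(expected_lives_saved(tiny_reduction, moral_weight=Fraction(1, 1000)))  # 100000
```

Under equal weighting, even this tiny reduction is worth a hundred million expected lives, which is what gives maxipok its force. Change the weight on future lives and the conclusion changes with it.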

It should also be noted that human extinction is only one of the four x-risks that Bostrom (2013) considers. The four are:

Extinction, as discussed above.

Permanent stagnation, in which human civilization lasts into the long term but at a technological level far below maturity.

Flawed realization, in which human civilization reaches technological maturity in a way that is irremediably flawed. What counts as flawed is subjective.

Subsequent ruination, in which human civilization achieves technological maturity but is subsequently and permanently ruined.

There is also a notion of s-risk (suffering risk), a fate worse than death, which could be propagated at a cosmic scale. Discussing this concept would take me too far afield, so I’ll link to the Center for Reducing Suffering for further discussion.

Classifying and Communicating X-Risk

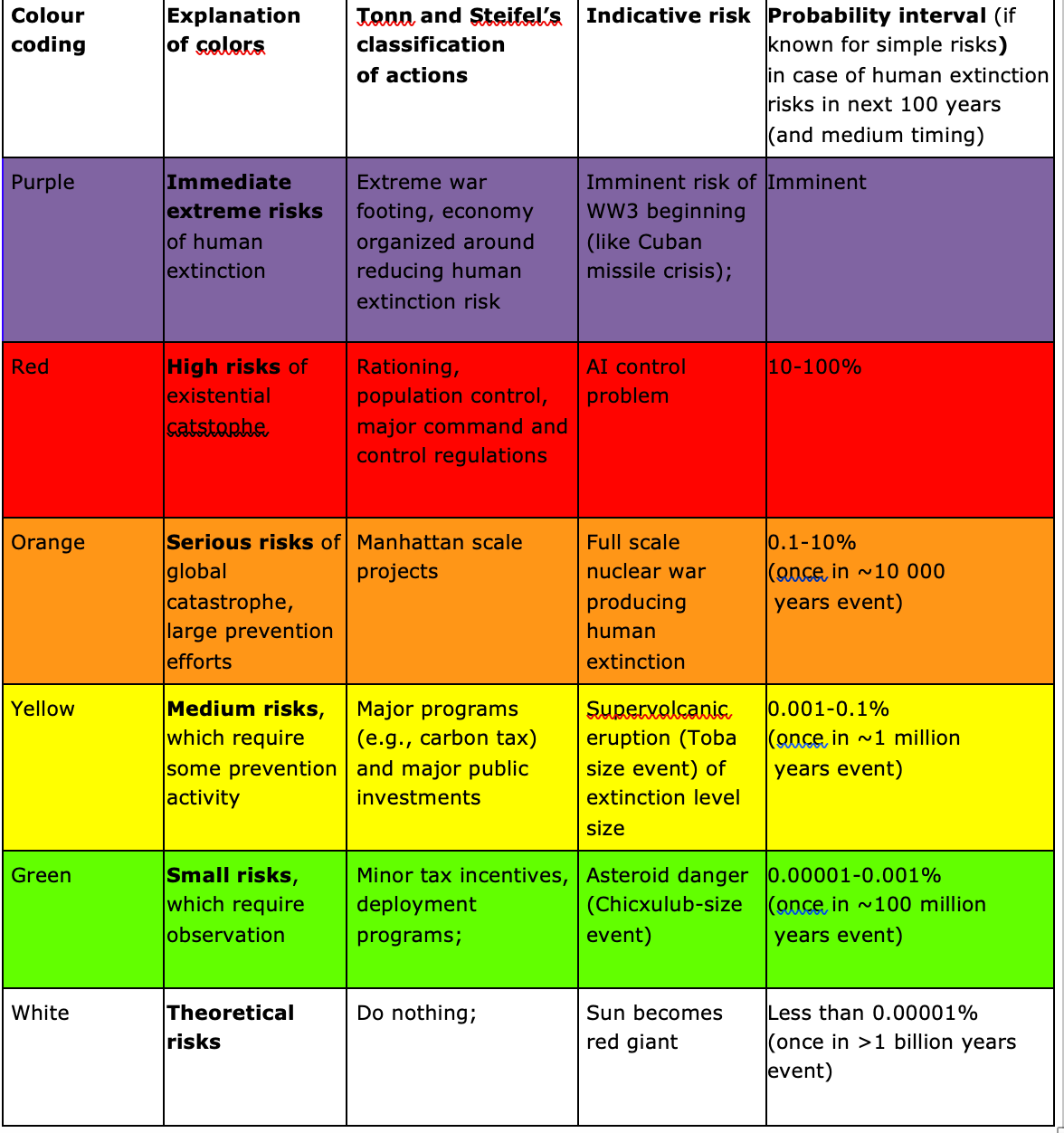

There are all sorts of things we can imagine, some more plausible than others, that might pose x-risk. Even building a complete list, let alone assessing the danger of each item, is difficult. Turchin and Denkenberger (2018) have attempted to do so with a layperson-friendly color-coding scheme.

These indicative risks, which you can see belong entirely to the first of Bostrom’s (2013) four categories, are just a few of the risks that can be imagined. Other prominent risks include pandemics (both natural and artificial), a runaway nanotechnology disaster (“grey goo”, as introduced by Drexler (1986)), and many others. You can really let your imagination run wild with this. From having read some material from the Effective Altruism community on x-risk, I get the impression that most practitioners in the field consider the artificial intelligence control problem to be the single most serious risk by far, and the next two most serious risks would be a deliberately bioengineered pandemic and nuclear war resulting in nuclear winter. I have written several times in the past on why I don’t consider AI risk to be as great as many x-risk researchers suggest or the safety measures proposed to be appropriate. I also considered two years ago why the nuclear winter scenario is implausible.

The purpose of Turchin and Denkenberger (2018) is not to analyze specific risks but to present a simple and unified method of communication that will help the public understand them. Their six-level system is meant to be analogous to several other hazard scales, such as the Palermo scale for the combined likelihood and hazard of an asteroid impact, as introduced by Chesley et al. (2002), and especially the more coarse-grained, color-coded Torino scale for asteroid risk. They also refer to the Doomsday Clock of the Bulletin of the Atomic Scientists as a general agglomeration of existential risk.

When I read this paper, though, I was most reminded of the Homeland Security Advisory System. You guys remember the HSAS, right? That was the color-coded system, with five grades (though the lower two grades were never used), to assess the likelihood of a terrorist attack. The U.S. Department of Homeland Security maintained the system from 2002 to 2011, at which time it was replaced by the National Terrorism Advisory System, which remains in effect. The Wikipedia article expounds greatly on the many problems that led to the HSAS’ replacement.

I can foresee many of those same problems with the proposed system of Turchin and Denkenberger (2018). Asteroid risk can be estimated fairly reasonably and presented with the Palermo or Torino scales. Likewise, the volcanic explosivity index, the Richter scale for earthquakes, and the Saffir-Simpson scale for hurricane wind strength (categories 1-5) are all fairly objective. But at the present state of knowledge, there is simply no way to characterize x-risk in the same way.
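To make the contrast concrete, here is a minimal sketch of the Palermo scale as it is usually stated: the base-10 logarithm of the ratio between an object's impact probability and the background probability of an impact of at least the same energy over the same time span. The function names and example inputs are hypothetical, and the background-frequency formula is written from memory of the published definition, so treat it as an assumption to check against Chesley et al. (2002).

```python
import math


def background_frequency(energy_mt):
    """Assumed annual frequency of impacts with at least `energy_mt`
    megatons of TNT: 0.03 * E^(-0.8)."""
    return 0.03 * energy_mt ** -0.8


def palermo_scale(impact_probability, energy_mt, years_until_impact):
    """log10 of the object's risk relative to the background risk
    accumulated over the years remaining until the possible impact."""
    background_risk = background_frequency(energy_mt) * years_until_impact
    return math.log10(impact_probability / background_risk)


# Hypothetical object: 1-in-10,000 chance of a 100-megaton impact in 50 years.
value = palermo_scale(1e-4, 100.0, 50.0)
print(round(value, 2))  # negative, i.e. below the background risk
```

Every input here (probability, energy, time) can be estimated from observation. Nothing comparable exists for most of the x-risks in the proposed color scheme.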

An X-Risk Kuznets Curve?

This discussion can get depressing. Civilizational collapse and long stagnation are hardly appealing scenarios, and they are themselves forms of x-risk according to Bostrom (2013). If we avoid them, we might fear that advancing technology will continue to pose more and more x-risks, and that at some point in the not-too-distant future, one of them will catch up with us. Such is the thesis of Rees’ (2003) Our Final Century.

But according to Trammell and Aschenbrenner (2024), this need not be the case. Since the first version of this paper was released in 2020, it has been highly influential in the x-risk community. The authors construct a model showing that a Kuznets-curve-like pattern may hold: x-risk increases up to some point, and then, either because technologies are inherently safer or because society is investing more into safety, x-risk should decrease to zero. This grants civilization a non-zero chance at long-term survival.

Trammell and Aschenbrenner (2024), following the terminology of previous authors, distinguish between state risks and transition risks. A state risk is one which applies for each unit of time at a fixed technology level. A transition risk is one posed by the invention of a new technology, and it disappears after the technology is established. They construct a model for the case in which only state risks apply, and one for the case in which both state and transition risks apply.

First, Trammell and Aschenbrenner (2024) consider state risk only, with no policy for risk reduction. They show that, contrary to the intuition of some, an acceleration of technological development generally reduces long-term risk, though it may raise short-term risk and lower civilization’s life expectancy. The intuition behind their result is that total long-term risk can be calculated as the integral, over all time, of the risk at each moment. An acceleration of technology, whether one-time or permanent, compresses the risk curve along the time axis and thus reduces the area under it. They show that a permanent acceleration of technology might even convert a civilization from one that is doomed to an eventual existential catastrophe to one that has a chance of surviving in the long term. These results require that the level of state risk converges to zero as the technology level approaches infinity.
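The integral argument can be sketched numerically. The toy below is not the authors' model: the hazard curve, the exponential technology path, and all parameter values are assumptions, chosen so that state risk first rises and then converges to zero as technology grows. It relies only on the standard relation that survival probability equals exp(-cumulative risk).

```python
import math


def state_risk(tech_level, scale=0.05):
    """Assumed toy hazard per unit time: peaks at tech_level = 1,
    then decays to zero as technology keeps advancing."""
    return scale * tech_level * math.exp(-tech_level)


def cumulative_risk(growth_rate, initial_tech=0.1, horizon=600.0, steps=60_000):
    """Trapezoid-rule integral of state risk over time, with technology
    following tech(t) = initial_tech * exp(growth_rate * t)."""
    dt = horizon / steps
    total = 0.0
    previous = state_risk(initial_tech)
    for i in range(1, steps + 1):
        tech = initial_tech * math.exp(growth_rate * i * dt)
        current = state_risk(tech)
        total += 0.5 * (previous + current) * dt
        previous = current
    return total


# Compare a baseline growth rate with a permanently doubled one.
for growth_rate in (0.02, 0.04):
    area = cumulative_risk(growth_rate)
    survival = math.exp(-area)
    print(f"growth={growth_rate}: cumulative risk={area:.3f}, survival={survival:.3f}")
```

In this toy, doubling the growth rate halves the area under the risk curve, so the long-run survival probability rises. The peak of the hazard arrives sooner, though, which mirrors the short-term cost noted above.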

Next, Trammell and Aschenbrenner (2024) consider state risk with a deliberate policy of risk reduction. In this model, a portion of the resources that would otherwise go to consumption can instead be spent on risk reduction. In this case, unlike the previous one, risk grows endlessly with advancing technology in the absence of any mitigation. The risk level has constant elasticity with respect to both the technology level and mitigation spending; that is, a one percent change in either changes the risk level by a fixed percentage. If the latter elasticity is greater than the former, then it is possible to invest growing amounts in safety over time while maintaining a positive probability of long-term survival. Otherwise, long-term survival is impossible.
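A stripped-down illustration of why the comparison of elasticities matters is given below. It is a sketch under strong assumptions that are not taken from the paper: technology grows exponentially, safety spending is a fixed share of a quantity proportional to the technology level, and the hazard is base_hazard * tech^beta * spending^(-alpha). The names alpha, beta, base_hazard, and safety_share are hypothetical.

```python
import math


def long_run_survival(beta, alpha, growth_rate=0.02, safety_share=0.5,
                      base_hazard=0.001):
    """Probability of surviving forever in the toy model.

    With tech(t) = exp(growth_rate * t) and spending = safety_share * tech,
    the hazard is base_hazard * safety_share**(-alpha)
    * exp(-(alpha - beta) * growth_rate * t).
    """
    decay = (alpha - beta) * growth_rate
    if decay <= 0:
        # Hazard never shrinks, so cumulative risk diverges.
        return 0.0
    initial_hazard = base_hazard * safety_share ** (-alpha)
    cumulative = initial_hazard / decay  # integral of the decaying exponential
    return math.exp(-cumulative)


# Mitigation elasticity above the technology elasticity: survival is possible.
print(long_run_survival(beta=1.0, alpha=1.5) > 0)   # True

# Mitigation elasticity below the technology elasticity: doom is certain.
print(long_run_survival(beta=1.0, alpha=0.8))       # 0.0
```

The fixed spending share is the crudest part of this sketch. The point it illustrates is only that the hazard decays over time exactly when spending bites harder than technology does.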

Finally, Trammell and Aschenbrenner (2024) broaden the model to include transition risk as well as state risk. With this, they find that the elasticity with respect to risk mitigation spending must exceed the sum of the elasticities with respect to state risk resulting from advancing technology and the transition risk at a given time. Though this condition is more stringent, the earlier result still holds in all cases: a technology acceleration improves the likelihood of the civilization’s long-term survival, and it may even grant a chance of long-term survival to a civilization that would otherwise have none.

Trammell and Aschenbrenner (2024) observe that, in a civilization that achieves long-term survival, existential risk must converge to zero, which implies that there is a peak and decline. They analogize this phenomenon to the environmental Kuznets curve of Stokey (1998), which posits that wealthier societies are willing to spend more on mitigation of environmental problems, leading to an observed peak and decline in many environmental impacts.

The paper does have some limitations. It treats technological development as monolithic, and it does not address the possibility that the choice of which technologies are developed at a particular time can be subject to policy. The models are also theoretical rather than empirical, so it is unclear how closely they correspond to real-life data.

Quick Hits

In light of recent events, it would be worth reviewing the Institute for the Study of War’s analysis of Russian hybrid warfare. Over the last three years, opponents of assistance for Ukraine have given various justifications for their position, but I have suspected from the beginning that the real reason is sympathy for Russian ideology and actions. Recent events have made this obvious.

Worldwide, Christian nationalists in particular support Russian militarism out of the belief that Russia is a Christian nation, and that warfare will establish a kind of caliphate to overthrow what they see as the corrupt Western order. Many pieces debunk this notion, such as Stradner (2023), Webb (2022) from a Calvinist perspective, and Warsaw (2024) from a Catholic perspective.